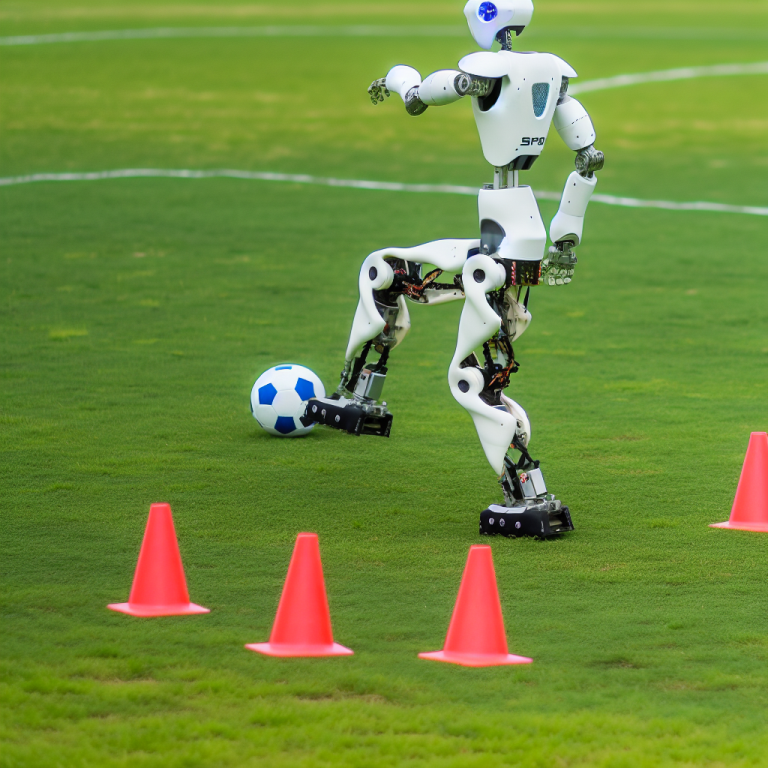

A recent study by Haarnoja et al. applied deep reinforcement learning to improve the motor skills of bipedal robots, using humanoid soccer as the test bed. Traditional control methods have struggled to adapt to specific tasks in the real world, which makes robust motor skills hard to develop. The researchers used deep reinforcement learning to train controllers for humanoid robots and set up one-on-one soccer matches between them. The robots displayed emergent behaviors such as recovering from falls, as well as tactical ones such as defending the ball against an opponent. The learned controllers also moved faster than a scripted baseline controller, which suggests the approach could extend to more intricate interactions involving multiple robots.

The goal of the study was to explore whether deep reinforcement learning could produce sophisticated and safe movement skills for a low-cost, miniature humanoid robot, and whether those skills could be composed into complex behavioral strategies. Using deep reinforcement learning, the researchers trained a humanoid robot to play a simplified one-on-one soccer game. The resulting agent had robust and dynamic movement skills: it could recover quickly from falls, walk, turn, and kick, and it transitioned between these movements smoothly and efficiently. The agent also learned to anticipate ball movements and block opponent shots, adapting its tactics to specific game situations in a way that would be impractical to engineer by hand.

Training took place in simulation, and the learned behaviors were transferred directly to real robots with no further adaptation (zero-shot transfer). A combination of sufficiently high-frequency control, dynamics randomization, and perturbations during training made good-quality transfer possible. In experimental evaluations, the trained agent outperformed the scripted baseline: it walked 181% faster, turned 302% faster, took 63% less time to recover from falls, and kicked the ball 34% faster.
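To make the reported percentages easier to compare, the short sketch below converts them into multiplicative ratios against the scripted baseline. It assumes the usual reading of the phrasing: "X% faster" means the speed is (1 + X/100) times the baseline, and "X% less time" means the duration is (1 - X/100) times the baseline. The function and variable names are illustrative and do not come from the paper.

```python
def faster_to_speed_ratio(percent_faster: float) -> float:
    """Read 'X% faster' as: new speed = (1 + X/100) * baseline speed."""
    return 1.0 + percent_faster / 100.0


def less_time_to_time_ratio(percent_less: float) -> float:
    """Read 'X% less time' as: new time = (1 - X/100) * baseline time."""
    return 1.0 - percent_less / 100.0


# Percentages as reported in the summary above (assumed interpretation).
walk_ratio = faster_to_speed_ratio(181)      # walking speed vs. baseline
turn_ratio = faster_to_speed_ratio(302)      # turning speed vs. baseline
kick_ratio = faster_to_speed_ratio(34)       # ball speed after a kick vs. baseline
getup_ratio = less_time_to_time_ratio(63)    # get-up time vs. baseline

print(f"walking speed: {walk_ratio:.2f}x baseline")
print(f"turning speed: {turn_ratio:.2f}x baseline")
print(f"kick speed:    {kick_ratio:.2f}x baseline")
print(f"get-up time:   {getup_ratio:.2f}x baseline "
      f"(about {1.0 / getup_ratio:.1f}x quicker)")
```

Under this reading, the learned agent walks at roughly 2.8 times and turns at roughly 4 times the baseline speed, and gets up in a little over a third of the baseline time.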

A long-standing goal of AI researchers and roboticists is to develop agents with embodied intelligence that can act with agility, dexterity, and an understanding of their surroundings comparable to that of animals or humans. Recent advances in learning-based techniques, particularly deep reinforcement learning, have accelerated progress toward this objective. Complex motor control tasks have been addressed for both simulated characters and physical robots, but humanoid and bipedal robots have received less attention because of the additional challenges they pose in stability, safety, number of degrees of freedom, and hardware constraints.

Deep reinforcement learning has yielded more versatile and efficient control methods for humanoid robots, enabling agile and stable whole-body behaviors that go beyond basic skills such as walking, running, and jumping.

Soccer showcases many aspects of human sensorimotor intelligence, and its complexity poses distinct challenges for robotics. The researchers focused on simplified one-on-one soccer matches to demonstrate the full skill set of the humanoid robots, using a sensorimotor full-body control strategy based on proprioceptive and motion capture observations. The project lays a foundation for further research into the agility and proficiency of humanoid robots in physical environments.

Source: https://www.science.org/doi/10.1126/scirobotics.adi8022